The nature of science

Over the course of the (relatively brief) history of this blog, I’ve covered a number of varied topics. Many of these have been challenging to write about, either because they are technical and thus require significant effort to distill into something sensible and free of jargon, or because they address personal issues related to mental health or artistic expression. But despite the nature of those posts, this week’s blog has proven to be one of the most difficult to write, largely because it demands a level of personal vulnerability, an acceptance of personality flaws and a potentially self-deprecating message. Alas, the topic feels too important to me to ignore.

It should come as no surprise to any reader, whether scientifically trained or not, that scientific research is expected to be totally objective, clear and accurate. Research that is seen to fall short of these standards is readily labelled ‘bad science’. Naturally, we aim to maximise the value of our research by meeting them as best we possibly can. Therein, however, lies the limitation: we can never be totally objective, clear or accurate in research, and acknowledging and discussing the limitations of research is a vital aspect of any paper.

The imperfections of science

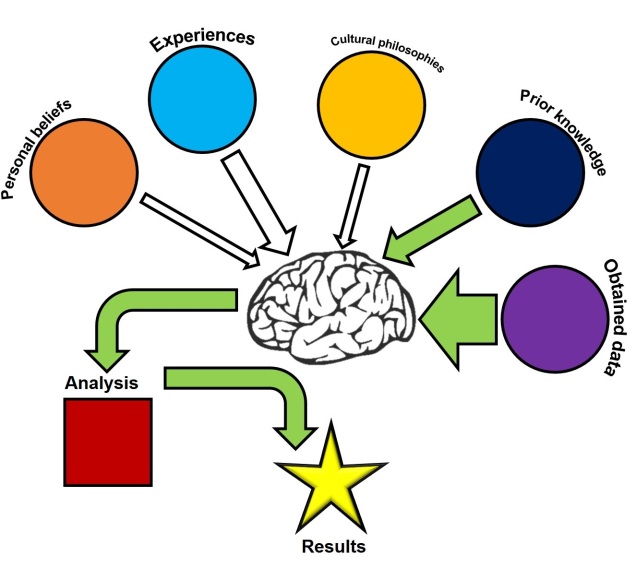

The basic underpinning of this disjunction lies with the people who conduct the science. While the scientific method has been developed and improved over centuries to be as objective, factual and robust as possible, the researchers behind it will always be plagued to some degree by subjectivity. Whether we consciously mean to or not, our prior beliefs, perceptions and history influence the way we conduct or perceive science (hopefully, only to a minor extent).

Additionally, one of the drawbacks of being mortal is that we are prone to making mistakes. Biology is never perfect, and the particularly complex tasks and ideas we set ourselves to research inevitably involve some level of error. But while that may seem to fundamentally contradict the nature of science, I argue that it is in fact not just a reality of scientific research, but also a necessity for progress.

Impostor syndrome

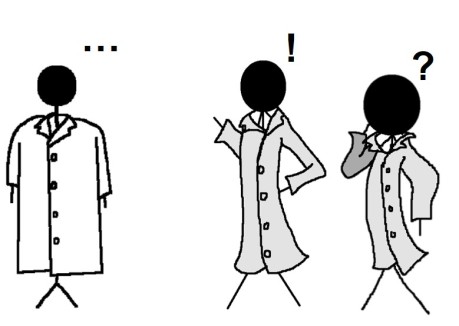

One widely recognised manifestation of this disjunction between idealistic science and practical science, and one particularly felt by researchers in training such as postgraduate students, is referred to as ‘impostor syndrome’. This involves the sometimes subtle (and sometimes more overt) feeling of inadequacy when we compare ourselves to a wider crowd. It is the feeling of not belonging in a particular social or professional group due to a lack of experience, talent or other ‘right’ characteristics. This is particularly pervasive in postgraduate students as we inevitably interact with, and compare ourselves to, those we aspire to be like (postdoctoral researchers, professors, or other more established researchers), who are naturally more experienced in the field. The jarring gap between our own capability and theirs, with capability often inaccurately assumed to be a proxy for intelligence, leads many to feel incapable of being, or inadequate as, a ‘real’ scientist.

It cannot be overstated that impostor syndrome is often the result of mental health issues and a high-pressure, demanding academic system, and rarely a rational perception. In many cases, we (both students in academia and the general public) see only the best aspects of scientific research: a rose-coloured view of the process. What we don’t see, however, is the series of failures and missteps that have led to even the best of scientific outcomes, and so we may assume that they didn’t happen. This is absolutely false.

Analysis paralysis

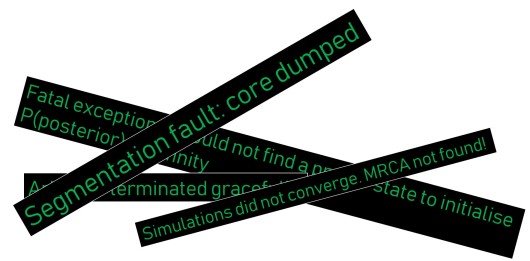

Another tangible impact of impostor syndrome and self-induced perfectionism is the suppression of progress. By this I mean the typical ‘procrastinating’ behaviour that comes about from perfectionism: we often prevent ourselves from moving forward if we perceive that there might be issues (however minor) with our work. Within science, this often involves inordinate amounts of reading and preparing for how to run an analysis without actually running anything. This is what has been called ‘analysis paralysis’, and it disguises inactivity under the pretence that the student is still learning the ropes.

The reality is that trying to predict the multitude of factors and problems one can run into when conducting an analysis is a monumental task. Some aspects relevant to a particular dataset or analysis are unlikely to be discussed or clearly referenced in the literature, and are thus difficult to anticipate. Problem solving is often more effective as a reactive, rather than proactive, measure: it allows researchers to respond to an issue when it arises instead of getting bogged down in the astronomical realm of “things that could possibly go wrong.”

Drawing on personal experience, this has led to literal months of reading and preparing data for running models, only to have the first dozen or more attempts not run, or run incorrectly, due to something as trivial as formatting. The lesson learnt is that I should have just tried to run the analysis early, stuffed it all up, and learnt from the mistakes with a little problem solving. No matter how much reading I did, or ever could do, some of these mistakes could never have been explicitly predicted a priori.

Why failure is conducive to better research

While we should always strive to be as accurate and objective as possible, sometimes this can be counterproductive to our own learning. The rabbit holes of “things that could possibly go wrong” run very, very deep, and if you fall down them, you’ll surely end up in a bizarre world full of odd distractions, leaps of logic and insanity. In these circumstances, I suggest allowing yourself to get it wrong: although repeated failures are undoubtedly damaging to the ego and confidence, giving ourselves the opportunity to make mistakes and grow from them ultimately allows us to become more productive and educated than if we avoided them altogether.

Speaking at least from personal experience (although my story appears to be corroborated by other students’ experiences), some level of failure is critical to the learning process and important for scientific development generally. Although cliché, “learning from our mistakes” is one of the quickest and most effective ways to learn, and allowing ourselves to be imperfect, a little inaccurate or at times foolish is conducive to better science.

Allow yourself to stuff things up. You’ll do it way less in the future if you do.